AWS Lambda Functions: Return Response and Continue Executing

A how-to guide using the Node.js Lambda runtime.

Mon, 29 Feb 2016

As an engineering-driven culture, we at Trek10 constantly evaluate new technologies, tinkering with them to figure out how they might fit into our workflows and processes. The coolest new framework we have been playing with recently? Serverless.

I started working with the framework when it was still called JAWS. It has had a significant impact on Trek10 in two ways: on our internal company operations and on our approach to client projects.

As a technology shop and AWS consulting partner, we run a lot of internal processes: monitoring infrastructure, responding to webhooks, processing SNS notifications, crunching billing data (to figure out which Reserved Instances to recommend), and all sorts of other ad-hoc jobs. In the past, we tried solutions such as Minicron and Docker with cron. Their downfall is that they are trickier than they seem. At the start, the only requirement appears to be enough know-how and time to get a job working. Things quickly escalate into talking with our DevOps folks to get a server ready, or asking for help setting up a Docker container and making sure the job is running properly. With all the setup and configuration management, something that should have been simple takes longer than writing the script itself. Then, to do it properly, you should add monitoring to make sure the job keeps running as expected.

The fix is to bring a little more structure into the process. After a few months of working with Serverless, we found it introduced enough process and convention that most of the team at Trek10 could create and manage their own processes and scripts.

The benefit to us as a company is that it allows us to move faster and build more complex scripts and jobs without introducing too much management overhead.

Some examples of processes that AWS Lambda & Serverless are powering right now at Trek10 include:

I don’t want to put the Serverless framework into the “only good for scheduled or ad-hoc jobs” box. We, like others, use it to power full software applications. What Rails is to Ruby, Serverless is to AWS Lambda & API Gateway. The broader goal of Serverless is worth mentioning too: to be the “Rails” of cloud event-driven computing in general.

Serverless is particularly good at building and managing the complete workflow of microservice-based architectures. Like any proper service-oriented architecture, it lets you manage deployments at the service level. Even more interesting, depending on the isolation you need, you can manage deployments at the level of an individual function or endpoint. This lets your developers iterate and move fast. Rollbacks are also a snap.

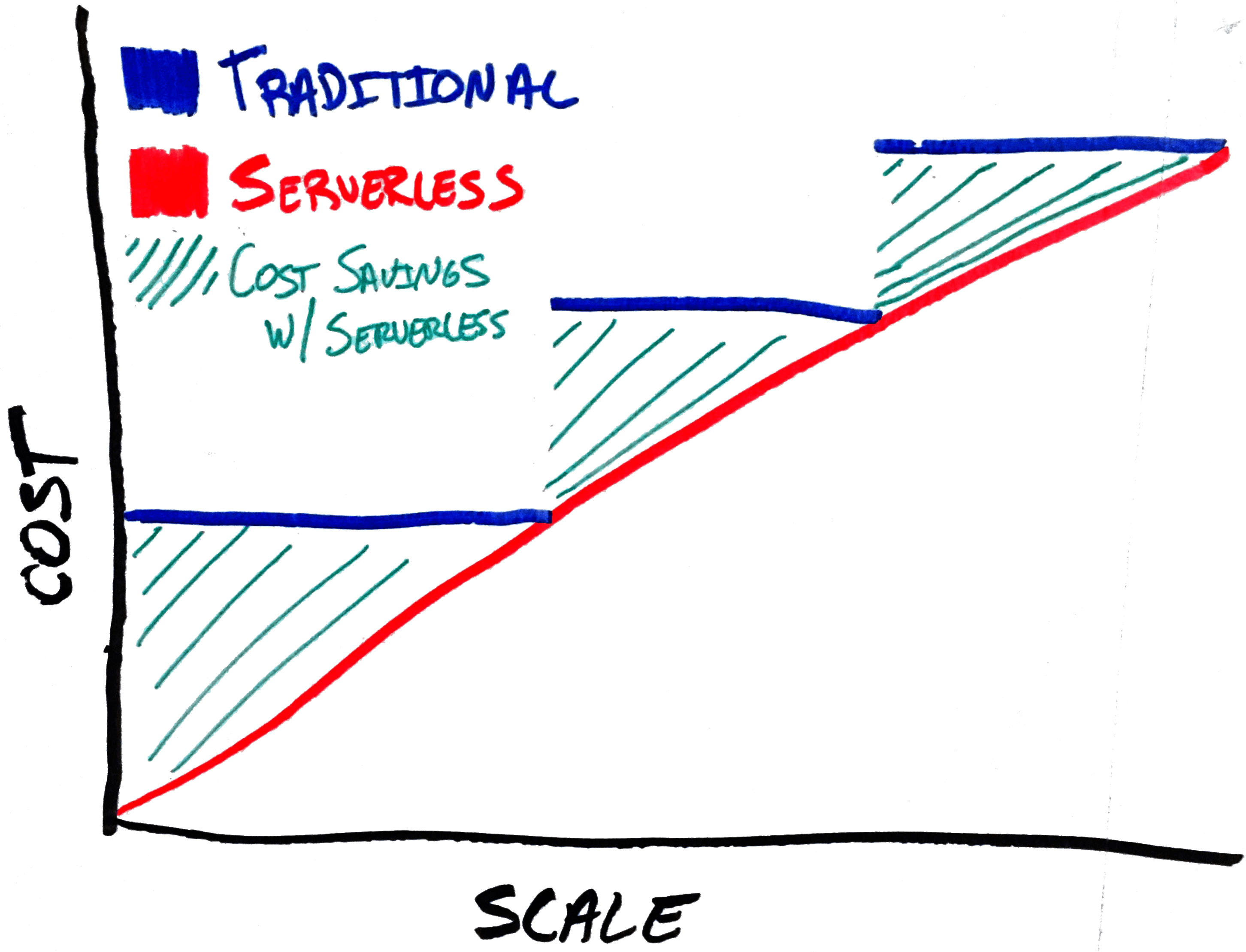

Another thing that can’t be ignored when building with the Serverless framework is the cost savings that come from paying only for the compute time you use. Many projects don’t receive traffic 24/7, which means their servers are often idle; for smaller projects, this can be as much as 90-95% of the time. With Lambda, idle time is not billed. As a result, smaller applications can often run for free, or nearly free, for their entire lifetime, and many larger applications can see a significant cost reduction. And paying for the servers isn’t where the cost ends: running a project on a server also means proper monitoring and maintenance (security updates, log rotation, etc.). Serverless reduces the overhead of running a small application whether usage is light or heavy.
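The cost argument above comes down to simple arithmetic. The sketch below compares two billing models. An always-on server is billed for every hour, busy or idle. Lambda's pay-per-use model charges per request plus per GB-second of compute. The rates passed in are illustrative placeholders rather than quoted AWS prices, and the $0.05/hour server rate is hypothetical. The sketch also ignores the free tier, so substitute current pricing for a real estimate.

```javascript
// Back-of-the-envelope monthly cost: always-on server vs. pay-per-use Lambda.
// All rates are illustrative placeholders, not quoted AWS prices; free tier ignored.

const HOURS_PER_MONTH = 730; // 24 * 365 / 12

function serverMonthlyCost(hourlyRate) {
  // An always-on server is billed for every hour, busy or idle.
  return hourlyRate * HOURS_PER_MONTH;
}

function lambdaMonthlyCost({ invocations, avgDurationMs, memoryMb, pricePerMillionRequests, pricePerGbSecond }) {
  // Lambda bills per request plus per GB-second of compute actually consumed,
  // so idle time costs nothing and cost grows linearly with invocations.
  const gbSeconds = invocations * (avgDurationMs / 1000) * (memoryMb / 1024);
  const requestCost = (invocations / 1e6) * pricePerMillionRequests;
  return requestCost + gbSeconds * pricePerGbSecond;
}

// Example: a small internal job invoked 100,000 times a month.
const placeholderRates = { pricePerMillionRequests: 0.2, pricePerGbSecond: 0.0000166667 };
const lambdaCost = lambdaMonthlyCost({
  invocations: 100000,
  avgDurationMs: 200,
  memoryMb: 128,
  ...placeholderRates,
});
const serverCost = serverMonthlyCost(0.05); // hypothetical $0.05/hour instance
console.log(`Lambda: $${lambdaCost.toFixed(2)}, server: $${serverCost.toFixed(2)}`);
// prints "Lambda: $0.06, server: $36.50"
```

Both Lambda terms scale with `invocations`, so doubling traffic doubles the bill. The server costs the same whether it is idle or saturated, until it needs more capacity.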

Some examples of projects that AWS Lambda & Serverless are powering at Trek10 right now:

We’ve been lucky enough to work on Serverless applications where the scale is non-trivial. In other words, at a scale where we would normally have needed auto-scaling groups, and where it pays to optimize the system's core functionality because even small optimizations can yield large performance gains.

The most interesting realization we had with the Serverless framework is that it offers new ways to look at old problems. For instance, processing assets such as images typically requires a pool of workers and a queue, and you have to manage that entire system. Your workers would probably be a separate auto-scaling group pulling jobs from SQS or Redis, which adds at least two extra moving parts to your system. With Serverless, the Lambda fanout pattern keeps everything neatly within the same system, reducing your management and maintenance overhead.
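One common form of the fanout pattern uses a dispatcher function. It splits a batch into independent work items and fires one asynchronous Lambda invocation per item, so each image is handled by its own short-lived function instead of a worker pulling from a queue. The sketch below is illustrative. `fanOut` and the `resize-image` target are hypothetical, and the invoker is injected so the code runs without AWS. In a real deployment, `invokeAsync` would wrap the Lambda `Invoke` API with `InvocationType: "Event"`, for example via `InvokeCommand` from the AWS SDK for JavaScript v3 package `@aws-sdk/client-lambda`. That invocation type queues the request and returns without waiting for the worker to finish.

```javascript
// Sketch of a Lambda fanout dispatcher. invokeAsync(functionName, payload) is
// injected; in production it would perform an asynchronous ("Event") Lambda invoke.

async function fanOut(imageKeys, targetFunction, invokeAsync) {
  // Start every dispatch before awaiting any of them.
  const attempts = imageKeys.map((key) => invokeAsync(targetFunction, { key }));

  // allSettled ensures one failed dispatch (e.g. throttling) does not hide the others.
  const results = await Promise.allSettled(attempts);
  const failed = imageKeys.filter((_, i) => results[i].status === "rejected");

  // Report how many keys were handed off and which ones need a retry.
  return { dispatched: imageKeys.length - failed.length, failed };
}

// Usage with a stub invoker that just logs each dispatch.
const logInvoke = async (fn, payload) => console.log(`invoke ${fn} with ${JSON.stringify(payload)}`);
fanOut(["cat.png", "dog.png"], "resize-image", logInvoke).then((summary) => console.log(summary));
```

Injecting the invoker keeps the dispatch logic testable and leaves the choice of SDK version to the deployment.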

With these new patterns, we spent less time worrying about whether something would scale or managing a worker pool and job queue, and more time on detailed optimizations. In the image example, we could spend that extra time making image resizing 10% faster.

Since we’re on the topic of scaling, I want to point out that by eliminating auto-scaling groups, you no longer have to worry much about spiky loads, surprise traffic, or the morning business rush bringing your services to a crawl. Lambda & API Gateway scale automatically to very high request volumes (within account-level limits such as Lambda’s concurrency limit), so scaling becomes largely a matter of linear cost: spending grows in direct proportion to usage.

A wonderful side benefit of the Serverless framework is that it pushes people into the world of code-defined infrastructure, since your entire application is code. Different stages of your application can live in different regions. Even migrating your application to an entirely new AWS account is fairly straightforward if you use the framework properly, along with the tools it encourages and supports, such as CloudFormation. We find this encourages different parts of the business to collaborate on projects that can be reused within the company. The Serverless framework also promotes the idea of shareable projects and components.
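To make the stages-and-regions idea concrete, here is a minimal sketch of stage-parameterized deployment settings kept in code. The stage names, regions, and `resolveDeployment` helper are hypothetical. The `<service>-<stage>-<function>` naming mirrors the default the Serverless Framework uses for deployed function names in its v1+ releases, but treat it as illustrative here.

```javascript
// Hypothetical stage -> region mapping. Because it lives in version control,
// adding a stage or moving to a new account is a code change, not manual setup.
const STAGES = {
  dev: { region: "us-east-1" },
  prod: { region: "us-west-2" },
};

function resolveDeployment(service, stage, functionNames) {
  // Only accept stages defined above (not inherited object properties).
  if (!Object.prototype.hasOwnProperty.call(STAGES, stage)) {
    throw new Error(`unknown stage: ${stage}`);
  }
  const { region } = STAGES[stage];
  // Stage-qualified names keep dev and prod resources from colliding.
  return functionNames.map((fn) => ({
    name: `${service}-${stage}-${fn}`,
    region,
  }));
}

console.log(resolveDeployment("billing", "prod", ["crunch", "report"]));
```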

When I first ran across the JAWS (now Serverless) project and understood what it was trying to achieve and how, it helped me understand the true power of cloud event-driven computing. I brought it up to the team, saying, “This is it, this is The Next Big Thing™.”

I truly believe that we are just scratching the surface of what AWS Lambda can and will accomplish. I am sure that in the next few years we will see broader usage, bigger applications, and more powerful use cases in the event-driven computing world. I also believe we will see the Serverless framework, its community, and similar efforts by others build and maintain the fundamental building blocks that let everyone harness Serverless’ raw, underlying power.

Things I would like to see built with Serverless:
