
Eventually, you'll find that a template is too large, too unwieldy, or holds too many disparate resources. Just as variables and functions move into separate namespaces as an application grows, expect the same thing to happen to your infrastructure.

When this happens, it's usually best to start splitting templates along service-like lines. Within a service, differences in lifecycle often make good boundaries for further splits: stacks commonly divide between network and data store, or between functions and their event sources.

If you have a tightly bound system, nested stacks are great: they let you take several related CloudFormation stacks and deploy them as a unit. For things shared between multiple services, such as VPCs or peering connections, it's usually better to deploy that underlying infrastructure separately, because it has a different lifecycle and doesn't fit neatly into a single service.

Different teams can own these stacks, or they might need to be separated for other reasons. For these functionally separate stacks, avoid nesting; see the section below on "Sharing Values for Multi-Stack."

Even when breaking up a stack into nested stacks, understand that sharing large numbers of outputs carries inherent complexity. You need to remember to take each output from Stack A and plug it into a parameter in Stack B, and removing the item that created an output becomes a multi-step process, because every stack that consumes the value has to stop using it before the item itself can be removed.

This process is most often a problem for container-type resources like security groups. Often the child stack still has a resource in that group, which causes the deletion of the group to fail. There are workarounds for this involving a DeletionPolicy of Retain, but they mean you need to add processes to eventually delete those orphaned groups. All of these workarounds come down to not having CloudFormation fully clean up deleted resources, so that the dependent resources can be moved from the old container to the new one.
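In CloudFormation, that workaround is the DeletionPolicy attribute with a value of Retain. A minimal sketch, assuming a hypothetical AppSecurityGroup whose VPC ID is passed in as a parameter:

```yaml
Parameters:
  VpcId:
    Type: AWS::EC2::VPC::Id
Resources:
  AppSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    # Retain: if this resource is removed from the template or the stack is
    # deleted, CloudFormation leaves the group in place instead of deleting
    # it, so members that still live in other stacks don't block the change.
    DeletionPolicy: Retain
    Properties:
      GroupDescription: Application security group (hypothetical example)
      VpcId: !Ref VpcId
```

Once retained, the group is no longer managed by any stack, which is why a separate cleanup process has to delete it later.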

Once you've decided where to split your resources, it's time to decide what to do about the values you do need to share. Most often these are IDs of things like VPC subnets, security groups, and DynamoDB tables, or the hostnames of load balancers.

There are several ways to share values, and each one trades off mutability, reusability, isolation, and flexibility differently.

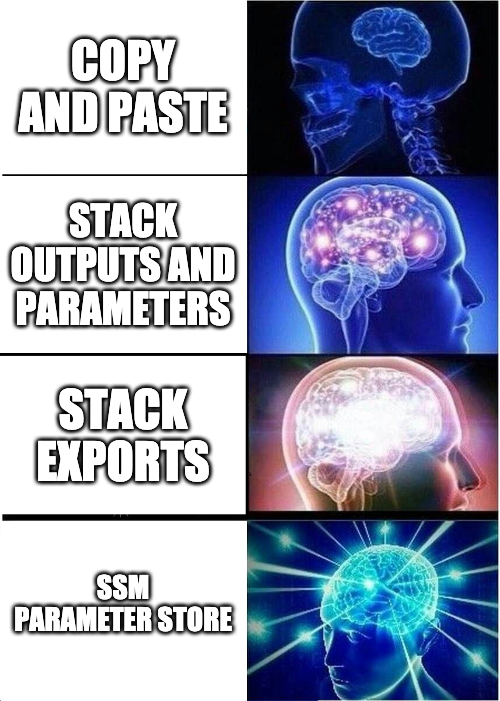

As in every other part of AWS, the most straightforward strategy is to not have one. Copy and paste values into individual stack parameters as you need them. This lack of a strategy comes with all the overhead and errors of any manual copy-paste based process. Values get outdated, people forget where the value came from, and providers don't know where their values are used.
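In its simplest form, the consuming template declares a plain parameter and whoever deploys it pastes the value in by hand. The VpcId parameter name here is hypothetical:

```yaml
Parameters:
  # Filled in manually at deploy time; nothing in CloudFormation records
  # which stack the value was copied from
  VpcId:
    Type: AWS::EC2::VPC::Id
    Description: VPC ID copied from the network stack's outputs
```

Typing the parameter as AWS::EC2::VPC::Id at least makes CloudFormation check that the pasted value is an existing VPC in the account and region, but it does nothing to keep the value current.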

Surely this isn't state of the art!

Another approach uses standard stack parameters and outputs, with a higher-level orchestration system such as Ansible transferring the values between stacks. This option has the added benefit of letting you define ordered dependencies across stacks, or deploy only a subset of stacks and stop at a checkpoint.

If you want to use CloudFormation itself for this purpose, you can use substacks (nested stacks) and pass outputs from Substack A to parameters of Substack B.

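```yaml
# parent.yml
Resources:
  SubstackA:
    Type: 'AWS::CloudFormation::Stack'
    Properties:
      # Local path: `aws cloudformation package` uploads the file to S3 and
      # rewrites this as an S3 URL before deployment
      TemplateURL: substacks/a.yml
  SubstackB:
    Type: 'AWS::CloudFormation::Stack'
    Properties:
      TemplateURL: substacks/b.yml
      Parameters:
        # Referencing SubstackA's output also makes SubstackB wait for it
        DataGoesHere: !GetAtt SubstackA.Outputs.MyDataStore
```

```yaml
# substacks/a.yml
Resources:
  Bucket:
    Type: 'AWS::S3::Bucket'
Outputs:
  MyDataStore:
    Value: !Ref Bucket
```

```yaml
# substacks/b.yml
Resources:
  # Placeholder only: a template must declare at least one resource
  Placeholder:
    Type: 'AWS::CloudFormation::WaitConditionHandle'
Parameters:
  DataGoesHere:
    Type: String
    Description: where to store the query output from the FrobKnobulator
```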

The same thing is possible using Ansible's cloudformation module, with the added ability to pause between stack deploys to do something else. With CloudFormation parent/child stacks, a failure when creating Substack B would cause a rollback of Substack A as well. That isn't necessarily wrong, but it does require that your deployment can be rolled back strictly within CloudFormation. In the example below, each stack is a separate cloudformation task, and the second task reads the first stack's outputs from its registered result.

```yaml
# Deploy Stack A and keep the module's result, including its outputs
- cloudformation:
    stack_name: StackA
    state: present
    template: "files/substacks/a.yml"
  register: stack_a

- debug:
    msg: "Other tasks can go here, enabling more complex deploys"

# Pass Stack A's output to Stack B; the module returns outputs as stack_outputs
- cloudformation:
    stack_name: StackB
    state: present
    template: "files/substacks/b.yml"
    template_parameters:
      DataGoesHere: '{{ stack_a.stack_outputs.MyDataStore }}'
  register: stack_b
```

Stack exports let a stack publish an output value under a name, and any other stack in the same account and region can read it with the CloudFormation intrinsic Fn::ImportValue (!ImportValue in short form), wherever you choose to add the import. Because exports are regionally scoped, they can't easily be copied across regions without replicating the entire structure (basically, all the stacks).

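```yaml
# parent.yml
Resources:
  SubstackA:
    Type: 'AWS::CloudFormation::Stack'
    Properties:
      TemplateURL: substacks/a.yml
  SubstackB:
    Type: 'AWS::CloudFormation::Stack'
    # Fn::ImportValue creates no implicit dependency, so wait for the
    # exporting stack explicitly
    DependsOn: SubstackA
    Properties:
      TemplateURL: substacks/b.yml
```

```yaml
# substacks/a.yml
Resources:
  Bucket:
    Type: 'AWS::S3::Bucket'
Outputs:
  MyDataStore:
    Value: !Ref Bucket
    # Export names must be unique within an account and region
    Export:
      Name: MyDataStore
```

```yaml
# substacks/b.yml
Resources:
  InsightVm:
    Type: 'AWS::IAM::ManagedPolicy'
    Properties:
      ManagedPolicyName: SomethingS3Read
      # AWS::IAM::ManagedPolicy takes PolicyDocument directly (no Policies list)
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - 's3:List*'
              - 's3:GetObject'
            Resource:
              # Bucket ARN, for bucket-level actions such as s3:ListBucket
              - 'Fn::Sub':
                  - 'arn:aws:s3:::${TheBucketName}'
                  - TheBucketName:
                      'Fn::ImportValue': MyDataStore
              # Object ARNs, for s3:GetObject
              - 'Fn::Sub':
                  - 'arn:aws:s3:::${TheBucketName}/*'
                  - TheBucketName:
                      'Fn::ImportValue': MyDataStore
```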

Whether you see it as an issue or a feature, exports are effectively immutable: once another stack imports an exported value, CloudFormation won't let you change that value or remove the export until every importing stack stops using it. This rule makes stack exports rigid, so they can be challenging to use in environments that change often.

As the name suggests, Systems Manager Parameter Store is very good at storing parameters for stacks and all manner of other things. In any template, you can make a new SSM parameter:

```yaml
# Assumes this template also defines an AWS::EC2::VPC resource named VPC
VPC1IDSSM:
  Type: AWS::SSM::Parameter
  Properties:
    Description: !Sub 'VPC ID from stack ${AWS::StackName}'
    Name: '/network/main/vpc-id'
    Type: String
    Value: !Ref VPC
```

Then, in any other template in the same account and region, take that value as a parameter:

```yaml
Parameters:
  TargetVpc:
    Type: AWS::SSM::Parameter::Value<AWS::EC2::VPC::Id>
    Default: /network/main/vpc-id
```

This way, you can use SSM parameters created by other stacks in the same region, and also parameters created by other systems such as Ansible. Because the template references only the parameter's path (not its value), you don't have to do the plumbing of retrieving values and supplying them as stack parameters. The downside is that SecureString parameters can't be used this way.
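A sketch of the Ansible side, assuming the amazon.aws collection's ssm_parameter module (module names have moved between collections over time, so check the one you have installed). The /network/legacy/vpc-id path and the vpc_id variable are hypothetical:

```yaml
# Hypothetical Ansible task: publish a VPC ID to Parameter Store so that
# CloudFormation templates can consume it by path
- amazon.aws.ssm_parameter:
    name: /network/legacy/vpc-id
    description: VPC ID managed outside CloudFormation
    string_type: String
    value: "{{ vpc_id }}"  # vpc_id is a hypothetical variable
    state: present
```

A template could then declare a parameter of type AWS::SSM::Parameter::Value<AWS::EC2::VPC::Id> defaulting to that path. Use a path that no CloudFormation stack also manages, so the two tools don't overwrite each other.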

There are other ways to use Parameter Store values beyond stack parameters. Dynamic references can pull values from Secrets Manager or SSM Parameter Store (including SecureStrings, which use the ssm-secure prefix rather than ssm). This method uses a special {{resolve:ssm:/my/value:1}} syntax. Note the last field, which specifies the parameter version. This means that, like stack exports, dynamic references can cause problems in highly dynamic environments: if the value changes, every place that resolves it must also update its version to get the new value.
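As a sketch, here is a dynamic reference used directly in a resource property. The security group and its description are hypothetical; the /network/main/vpc-id path reuses the parameter from the earlier example:

```yaml
Resources:
  AppSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Security group in the shared VPC (hypothetical example)
      # Resolved when the stack is created or updated. The trailing :1 pins
      # version 1 of the parameter, so newer versions are ignored until this
      # reference is edited and the stack is updated.
      VpcId: '{{resolve:ssm:/network/main/vpc-id:1}}'
```

Unlike the AWS::SSM::Parameter::Value parameter type, this adds no parameter to the template; the trade-off is the pinned version.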

Best of all, Parameter Store lets you open up a discussion within your team about naming things. Much like the color of a bike shed, these naming conventions are rarely controversial.

There's no "One True Path" for how to structure your Infrastructure-as-Code. Every way has tradeoffs between centralized management (usually by the CumuloNimbus Team) and customizability. In the same way that different organizations typically have their own conventions for how to split services across repositories, every shop tends to strike a balance that works for them.

Still uncertain? The most flexible way to start is via SSM Parameter Store. Parameters can live in separate stacks without requiring you to deploy those stacks together. Parameters can also be used (or created) with tools other than CloudFormation, which is great if parts of your team prefer Terraform, Ansible, or bash scripts.
